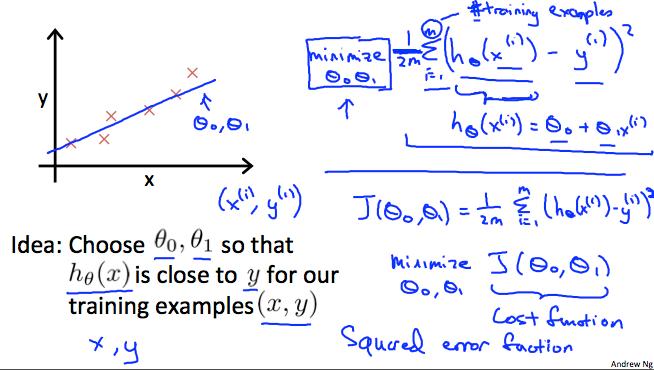

Cost Function

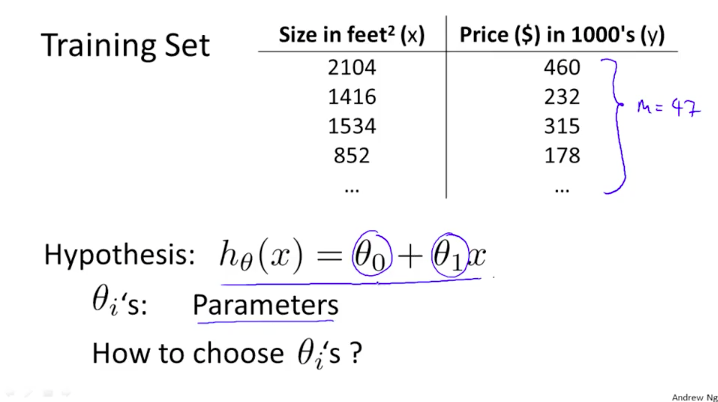

Using the housing price example again, recall that our hypothesis function predicts the price of the house (y) from its size in square feet (x). Our hypothesis, a linear regression equation in this case, is therefore:

$$h_\theta(x) = \theta_0 + \theta_1 x$$

$\theta_0$ and $\theta_1$ are parameters that determine what our hypothesis looks like.

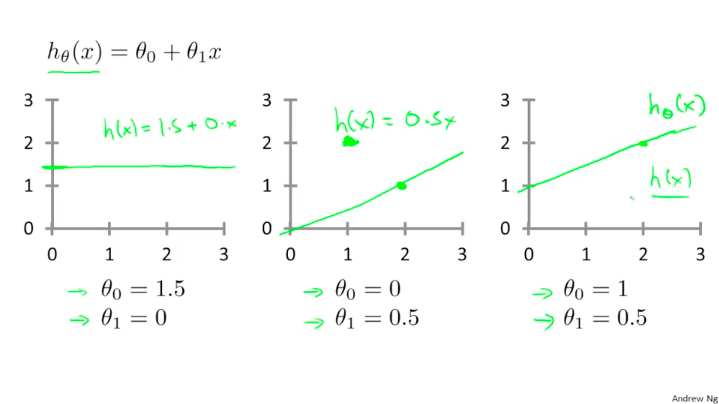

In the picture below, you see that different parameter values produce different hypotheses. When $\theta_0 = 1.5$ and $\theta_1 = 0$, we get a horizontal line that crosses the point (0, 1.5). When $\theta_0 = 0$ and $\theta_1 = 0.5$, we get a straight line that passes through (0, 0) and (2, 1) with a gradient of 0.5. When $\theta_0 = 1$ and $\theta_1 = 0.5$, we get a straight line that passes through (0, 1) and (2, 2) with a gradient of 0.5.
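As a quick check of the three lines described above, here is a minimal Python sketch. The function name `hypothesis` is illustrative, and the parameter pairs are inferred from the points each line is said to pass through rather than read off the picture.

```python
def hypothesis(theta0, theta1, x):
    """Linear hypothesis: h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x

# (theta0, theta1) pairs inferred from the described intercepts and gradients
params = [(1.5, 0.0), (0.0, 0.5), (1.0, 0.5)]

for theta0, theta1 in params:
    # Evaluate each line at x = 0 and x = 2, the two points named in the text
    y_at_0 = hypothesis(theta0, theta1, 0)
    y_at_2 = hypothesis(theta0, theta1, 2)
    print(f"theta0={theta0}, theta1={theta1}: (0, {y_at_0}), (2, {y_at_2})")
```

The intercept $\theta_0$ sets where the line crosses the vertical axis, and $\theta_1$ sets its gradient.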

Quiz

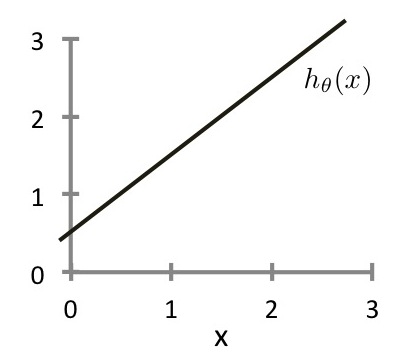

Consider the plot below of $h_\theta(x) = \theta_0 + \theta_1 x$. What are $\theta_0$ and $\theta_1$?

- ( )

- (x)

- ( )

- ( )

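We can measure the accuracy of our hypothesis function by using a cost function. It takes an average (actually a fancier version of an average) of the differences between the hypothesis's predictions for the inputs x and the actual outputs y:

$$J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left(\hat{y}_i - y_i\right)^2 = \frac{1}{2m} \sum_{i=1}^{m} \left(h_\theta(x_i) - y_i\right)^2$$

Here $m$ is the number of training examples. To break it apart, it is $\frac{1}{2}\bar{x}$, where $\bar{x}$ is the mean of the squares of $h_\theta(x_i) - y_i$, the difference between the predicted value and the actual value.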
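The cost, half the mean of the squared differences between predictions and actual values, can be sketched in a few lines of Python. The function name `cost` and the toy data are hypothetical and chosen only for illustration; they are not taken from the housing example.

```python
def cost(theta0, theta1, xs, ys):
    """Squared error cost: (1 / (2 * m)) * sum((h(x_i) - y_i) ** 2)."""
    m = len(xs)  # number of training examples
    squared_errors = [(theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / (2 * m)

# Hypothetical toy data lying exactly on the line y = x
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]

print(cost(0.0, 1.0, xs, ys))  # hypothesis matches the data exactly
print(cost(0.0, 0.5, xs, ys))  # gradient too small, so the cost is larger
```

A hypothesis that passes through every training point has a cost of zero; the further the predictions drift from the actual values, the larger the cost becomes.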

This function is otherwise called the "squared error function" or "mean squared error". The mean is halved as a convenience for computing gradient descent: differentiating the squared term produces a factor of 2, which cancels the $\frac{1}{2}$ term. The following image summarizes what the cost function does: